The Shelf Life of Digital Scholarship

Let me take one claim for granted: that the new work of composing is the work of composing new media as a form of scholarship, in which new media are more than objects of inquiry. They are the very means through which “new composers” do work, write, teach, and research.

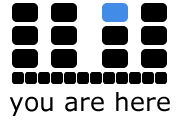

This assumption about the future of scholarly work raises important questions about its shelf life: how academic projects are organized, accessed, and archived. These activities fall under the all-encompassing umbrella of “metadata,” or “data about data.”[1] Of course, scholars already have at their disposal a wide array of metadata standards that address shelf-life issues. However, the new work of composing demands that “new composers” become increasingly aware of how these readymade standards emerge. Such awareness means that practitioners should routinely consider metadata’s role in classifying information as their digital projects develop iteratively.

An alternative to adopting a readymade standard is what might be called, in a Latourian fashion, a “Standards in the Making” (SITM) approach to composing with metadata in mind. This approach entails not only understanding how extensive existing metadata standards (e.g., Dublin Core) are, but also determining which standard best suits a particular project, even if that decision requires creating an entirely new schema (e.g., the tagsets designed for the Orlando Project for Women’s Writing in the British Isles).[2]

For the purposes of this chapter, what interests me most is precisely this creation of new schemas, though not because I find metadata standards unproductive or unnecessary. To be clear, we need data classification, and we need metadata standards. After all, scholars and publics alike require metadata standards to ensure interoperability and accessibility, and all too often the new media we study and compose do not adhere to these standards. That said, SITM emphasizes the very acts of classification that subtend and foster the emergence of a standard. It interrogates how metadata shapes the way people receive information, organize it, and mobilize it in knowledge-making. In short, it stresses information’s ecology: how classification systems, as cultural formations, resonate, clash, and create space for ambivalence, exclusion, and inclusion.

Put this way, the new work of composing is simultaneously media-making and model-making. It is a matter of:

1. Presenting scholarly work through new media that enact an argument, and
2. Articulating how that enactment is itself a persuasively designed process.

By attending to the function of metadata while media and models are being made, practitioners can better consider how projects are stored, reproduced, and revised, thereby extending the life of a project beyond, say, its publication in a journal or a book.

[1] On the question of when a digital humanities project is “done,” see the special cluster “Done,” edited by Matthew G. Kirschenbaum, in the Spring 2009 issue of Digital Humanities Quarterly.

[2] The Orlando Project describes the role of its tagsets as follows: “In its early phase the Orlando team created a new SGML application for encoding the text it was to produce. To produce the Orlando history, it created four main document type definitions (DTDs) or tagsets. These structure our information and embody our literary theory. They are the key connection between the literary and the computing in Orlando. Their distinctive feature is their relative emphasis on ‘semantic’ or ‘content’ tags. Produced in extensive analysis involving the literary and computing members of the Orlando Project team, the tagsets are the key connections between the literary and the computing sides of Orlando” [emphasis added]. SITM is similarly invested in examining how metadata structures information, embodies theory, and serves as a key connection between culture and computing.