Chapter 10

Assessing Civic Engagement:

Responding to Online Spaces for Public Deliberation

Meredith W. Zoetewey, W. Michele Simmons, and Jeffrey T. Grabill


ABSTRACT

Increasingly, professional writing and visual rhetoric courses include a community-based project on developing civic web sites. An important quality of civic web sites is their usefulness. However, the need to evaluate civic web sites designed for usefulness arises before instructors have the information required to gauge how audiences make use of the web site. Drawing from the concept of productive usability and Patti Lather’s notion of catalytic validity, this chapter investigates the role of evaluation in supporting end-user agency via online tools and offers an evaluation framework that can scale in time and space to enable course-based and community-based judgments.


How do we evaluate the usefulness of civic web sites at the point that students need feedback or a grade? Some of the strongest service-learning projects we assign ask students to craft what we’re calling civic web sites: community-based digital spaces that can be used to enable public deliberation. Web sites that fulfill a civic function usually connect to local interests that range from the commonplace (e.g., watershed education and research in Oxford, Ohio) to the mildly annoying (e.g., restrictions on transporting firewood in Lansing, Michigan) to the potentially catastrophic (e.g., shoring up against storm surge in Tampa, Florida) and everything in between. Often conceived in response to educational mandates issued by government agencies, civic web sites can spur extremely rewarding client–student partnerships. For example, the U.S. Environmental Protection Agency requires all municipalities to include a “public education and outreach component,” most often in the form of a web site, that addresses stormwater pollution prevention. However, the value of these web sites is not always evident within the time frame of a semester, which makes evaluation tricky.

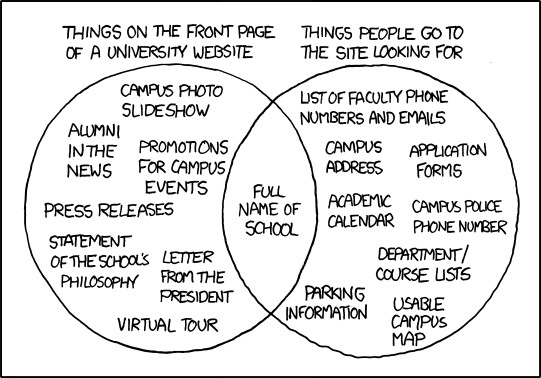

Although we can and should guide students as they conduct formative usability testing in the hope that the civic web sites they develop will ultimately be useful for the citizens who consult them, we instructors don’t have the option of waiting to see how end users actually make use of a web site before we must assess projects, assign grades, and provide summative evaluations for students. The concept of “usefulness” is important to our discussion. Barbara Mirel (2004) defines usefulness as a quality that enables users to do better work, not just to use an application more easily. To illustrate this crucial distinction, we can look close to home at university web sites. Randall Munroe’s xkcd, a popular web comic, featured a Venn diagram with two overlapping data sets: “Things On The Front Page Of a University Web site” and “Things People Go To The Site Looking For.” The two circles share only one item: the full name of the school (see Figure 1).

Figure 1. University Web site from xkcd.

Shortly after its release, this comic was profiled by Steve Kolowich (2010) in Inside Higher Ed for its resonance in academic circles and its potential to affect marketing communications approaches. Kolowich reported that the comic speaks to a serious disconnect between what administrators want to say and what students actually want to do with the university’s “strongest marketing asset: the institutional Web site’s homepage.” University web sites are almost always the result of careful planning and represent a significant investment on the part of the institutions they serve. These considerable resources often produce university web sites that are efficient (i.e., tasks are performed quickly), learnable (i.e., it’s easy to learn how the system works), memorable (i.e., it’s easy to remember how tasks are performed), and comprehensible (i.e., users make a minimum of errors). In short, they are extremely usable. But visitors are still left unsatisfied because a usable web site is not necessarily a useful web site. A useful web site would enhance the quality of the work experience and support complex forms of work.

Picking up on this discrepancy, scholarship in Rhetoric and Composition and Professional Writing has considered the ways in which civic web sites that are useful, in addition to being usable, have the potential to aid change in communities (Grabill, 2007; Simmons & Grabill, 2007; Simmons & Zoetewey, 2012). As we all know well, “change in communities” is a challenging concept that, even in the best of circumstances, takes time. What we haven’t yet addressed is how to reconcile an understanding of usefulness that must unfold over time with students’ need for timely evaluation in a course. Because the need to evaluate civic web sites designed for usefulness arises before we have the information we really require to gauge their value, we are left to make assumptions about how useful the digital work ultimately will be for end users. How do we determine usefulness at the point where students need feedback and a grade?

A word here about evaluation versus assessment: We are working with a commonplace distinction between evaluation (making summative value statements about student performance) and assessment (a broader term that includes formative decisions about outcomes). We focus here on evaluation rather than assessment because we are concerned in this moment with the reality of assigning a grade at the end of a semester that adequately reflects the success of the process involved in developing a web site that enables the kind of engagement, productive inquiry, and change that we equate with productive usability. We do see, however, that the heuristic we offer could, with modifications, be transformed into an assessment rubric. Indeed, given the relationships between assessment and evaluation, there should be criteria-based relationships between and among the assessment tools used in a civic web site project. Readers will therefore see us using both terms. We are interested in developing tools that enable use-oriented assessments framed by community need but that also allow course-based evaluations.

To consider these intersections, we look to a number of concepts drawn from our prior work and from empirical research methodology (e.g., Lather, 1986) to build an evaluation framework that can scale in time and space to enable course-based and community-based judgments. This chapter offers the field another means of cultivating civic participation by investigating the role of evaluation in supporting end-user agency via online tools. To ground our discussion, we introduce each of our criteria in terms of a real course assignment and deploy each assessment measure in turn. We conclude with a heuristic that other writing instructors can tweak and apply to the evaluation of their students’ web sites. We’ll begin with our assignment because, as Ed White (1999) reminded us, assessment begins not when we start to read student writing but when the assignment is made.

THE ASSIGNMENT

The Online Design Project, a capstone assignment from Michele’s visual rhetoric course, began with an important reminder: “While principles of good design can be applied to all document design projects, designing effective online projects requires additional strategies. Accessing, assembling, and making use of information online is more complex than it is in print and online design must accommodate these differences.” This 400-level course is a core requirement in the Professional Writing major, but it also enrolls students from other majors across the university, such as Strategic Communication and Interactive Media Studies. Through community-based projects, the course introduces theories and strategies of visual design and usability that culminate in print and online documents, such as brochures and web sites, for non-profit organizations. This project asked student teams to develop a web site for local community partners, most recently the nearby Hueston Woods State Park. Hueston Woods is the site of ongoing watershed data collection by Miami University’s Ecology Research Center.

The undergraduate and graduate visual rhetoric students at Miami University were charged with designing web sites that would educate and engage local publics, especially K–12 students and teachers. The students were primarily novices in their first visual rhetoric course. The course objectives that the project satisfies are familiar to many service-learning projects: Students will (hopefully) walk away with the ability to critique, plan, and design professional-caliber online material. The components of the project, as well as the learning outcomes that help students achieve those objectives, are also fairly customary: Students are required to critique similar web sites adopted by analogous organizations in a web site design inventory assignment, design multiple wireframes that illustrate the potential to engage multiple audiences in specific ways, diagram their proposed site based on early feedback from the client and users, develop a model to share with the client and end users, revise their site in light of usability testing, and compose a recommendation report that discusses how their design and content decisions address user needs and client purpose and that advocates for the site’s adoption by the client (see Appendix for the original assignment sheet). When it comes to evaluating assignments like this one, however, we find that conventional evaluation measures may not be adequate.

CONDUCTING USABILITY EVALUATIONS

Why Conventional Usability Evaluations Can Fall Short When Evaluating Civic Web Sites

As teachers of web design, we’ve often looked to conventional usability measures to help us assess student work on web sites. We mean no disrespect with our use of the term conventional: conventions and standards have staying power because they are reliable and useful (for example, see Krug, 2006; Nielsen, 2000). Conventional usability principles frequently prioritize simplicity over complexity, scannable content, and faster downloads, all in the name of efficiency (see Simmons & Zoetewey, 2012, for more on conventional usability’s features). And for the majority of online sites, these are sound usability strategies. In many ways, conventional usability’s strength lies in its broad applicability. But civic web sites might resist this one-size-fits-all approach to usability. Many citizens come to civic web sites for education, and they will likely find synopsized content especially scannable and usable. But online spaces that fulfill a civic function can potentially serve citizens whose interests extend beyond the educational goals so often privileged by their sponsoring agencies, and those user needs may fall outside conventional usability’s scope.

Consider, for example, the citizen who approaches a civic web site with the goal of shaping rather than understanding established public policy. Challenging established, accepted interpretations of complex scientific and technical problems is no easy task. Simply penetrating the specialized discourses surrounding technical issues is an uphill battle. So, for our hypothetical citizen to participate fully in the decision-making process, she would need to cultivate the specialized literacy practices valued by those experts (that is, to talk the talk), not “something that is clear enough for beginners” (Krug, 2006, p. 140). She would need unfettered access to unmediated data, not “short paragraphs, subheadings, and bulleted lists” (Nielsen, 2000, p. 101). A conventionally usable web site would probably not be especially useful for this citizen, though it might be efficient, learnable, memorable, and comprehensible.

So, in recognition of this gap where conventional usability principles may not accommodate the needs of some users, we advocate assessing for productive usability, a refinement of conventional usability. Productive usability emphasizes the primacy of usefulness and alternative use at all stages of the development cycle. It requires us to examine and test for patterns that support the features we found to be particularly important to actual citizens seeking to form their own impressions of the data that affect their communities. Our notion of productive usability can be traced back to a multi-year empirical study comparing data from six different environmental web site development projects for two Midwestern counties (Simmons & Zoetewey, 2012). To better identify the web site features citizens prioritized in their work, the study included more than 60 users discussing the work they wanted to accomplish with web sites related to stormwater pollution, recycling, watershed protection, sewer management, and invasive plant management. These data became the basis of productive usability.

Although it may be true that only time will tell how useful a web site truly is for users attempting to affect technical public sphere issues, there are aspects of productive usability that afford usefulness and that we can incorporate into our web site assignments and assessments. If we foreground these affordances from the beginning of our civic web site assignments and keep them central throughout the project, students are more likely to develop the kind of transformative civic web sites that enable communities to do work that matters to them. In the next section, we demonstrate how to evaluate web sites for productive usability by walking through student work produced in response to a civic web site development project. Before presenting these web sites, we should clarify that the samples we use to ground our framework for the rest of this chapter aren’t intended to act as models; rather, they are examples. As we discuss our evaluation criteria, we’ll talk about the ways in which these examples illustrate individual aspects of productive usability.

Criteria to Evaluate for Productive Usability

To evaluate for productive usability, we can:

- Look for evidence of concern for alternative use. Alternative use can be conceptualized as any use that enables any user to do work important to them, even if that use lies beyond client concerns.

- Look for evidence of concern for technical literacy in support of productive inquiry. Technical literacy helps to enable productive inquiry, but we must evaluate web sites for each.

- Look for evidence of concern for useful interactivity in the form of engagement, psychological interactivity, emotional connection, and exploration.

These attributes are at the heart of productive usability and can be traced directly to an empirical study we conducted to determine which features citizen end users needed to do their work online (Simmons & Zoetewey, 2012). All three support epistemic, or knowledge-making, work and the action it authorizes. For example, citizens in the earlier study were not always approaching civic web sites for education (i.e., information) about watersheds. Sometimes they were instead searching for actions they could take to reduce the amount of contaminants in their water. Our evaluation categories are thus not especially discrete; they overlap to inform and shape each other. Finally, as a way to tie together these three attributes, we draw on Lather’s (1986) notions of validity, particularly her concern for the “catalytic” practices and outcomes of the project.

Evaluate for Evidence of Concern for Alternative Use

Education is often the client’s first concern because civic web sites are frequently conceived as a response to a government mandate (think here of the EPA’s mandate for stormwater pollution prevention education mentioned earlier). End users, however, visit civic web sites with their own agendas, which can fall outside the client’s vision for using the online space. Students can tease out the alternative uses citizens bring to civic web sites by consulting a broader (but still relevant) range of users than those designated as the “prime” users. This research can occur early and often during the development process. The key point is that concern for alternative use requires process documentation, and this documentation must be built into the assignment itself.

Because our alternative-use criterion is process-oriented rather than production-oriented, evaluating for it means instructors must require and review documents that support the production of the main deliverables for the client (the web site and the recommendation report), such as research planning and analysis documents. For example, consider a project log not unlike the one described in Professional Writing Online (Porter, Sullivan, & Johnson-Eilola, 2008). This is a standard, multi-purpose model that requires students to record information about each work session, plans for future meetings, and information about work completed outside of formal sessions. Porter et al. value these logs for their ability to provide evidence of progress for upper management and to facilitate report writing. With a few tweaks specific to productive usability, we think these logs can also support civic web site development that emphasizes alternative use. In addition to collecting information about particular work sessions organized around participating student team members, work logs could be expanded to focus on prime and secondary user groups and their priorities (see Table 1).

Table 1: Work Log for Civic Web Site Development Project

| Current Session | Next Session | Work Completed Outside Formal Sessions |
| --- | --- | --- |
| | | |

At the point of evaluation, these logs could be triangulated with the recommendations put forward in the report for the community partner. In other words, work logs provide necessary process evidence that should be reflected in the argument the teams make to the client about why they made the decisions they did. Constructing work logs in this way helps keep alternative use central throughout the development process, beginning with initial research into secondary uses that are likely not on the education-driven community partner’s radar.

So what does concern for alternative use look like in practice? Returning to the Hueston Woods State Park project, the community partner, the Ecology Research Center (ERC), was composed of local college faculty. Their priorities for the proposed web site were complex and multi-layered. They wanted a web site to point potential donors to their vision for the education center they hoped to fund through grants and private donations. Other priorities included creating a sense of community through engagement and telling a “story” about the watershed to K–12 teachers and students. Their agenda is somewhat unusual given the ERC’s desire for a narrative and its openness to different types of narratives about the watershed. Teams of students interpreted the story of the watershed differently. Some shared the history of the watershed itself. Others told stories about the ERC faculty and how they became interested in the watershed project. Still others asked visitors to submit their own stories about their experiences at Hueston Woods State Park (more on this soon). Balancing the needs of primary users (K–12 teachers and students) with the desires of secondary users, such as serious fishermen or anyone looking to take their children fishing on a given Saturday, is difficult. Secondary users might approach the Hueston Woods web site looking for real-time data on the amount of dissolved oxygen in the water and the temperature of the lake, both of which strongly affect that day’s catch. A sample work log entry (see Table 2) shows how such logs can keep these overlapping uses of the web site central throughout the project.

Table 2: Sample Work Log Entry

| Current Session | Next Session | Work Completed Outside Formal Sessions |
| --- | --- | --- |
| Note: this example entry considers users outside the view of the community partner | Note: this example entry considers the community partner’s primary audience | Note: this example entry considers users outside the view of the community partner |
| | | |

Evaluate for Evidence of Concern for Technical Literacy in Support of Productive Inquiry

Technical literacy (Kinsella, 2004; Simmons & Grabill, 2007) refers to language practices that enable citizens to understand and/or talk about technical public sphere issues with experts in meaningful ways. Participating in the work of making public policy (not just understanding it but shaping it and challenging it) requires citizens to analyze technical subjects and use specialized language. Without technical literacy, citizens cannot engage in productive inquiry. Productive inquiry is the process by which citizens formulate the questions and statements necessary to discuss or dis/agree with scientific findings in meaningful, credible ways. Productive inquiry supports the kind of symbolic-analytic work that Johnson-Eilola (2005) and Reich (1992) advocated as necessary for citizens to bring about change.

To support citizen knowledge work, we can evaluate civic web sites for the presence of:

- Technical Glossaries: Students can feed civic agency by helping citizens “talk the talk” of technical experts. Without this language, lay people may find themselves and their concerns dismissed by experts.

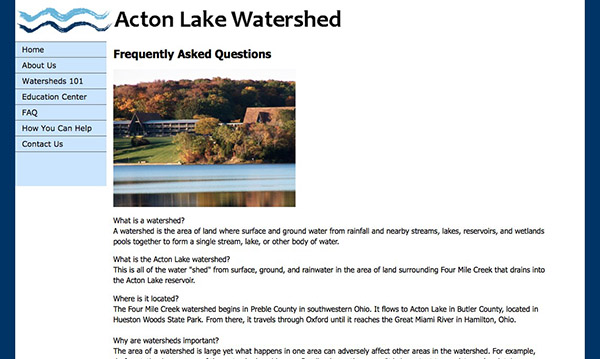

- FAQs: Likewise, frequently asked questions help users learn the discourse surrounding a particular topic, enabling citizens to participate in debates, be taken seriously in discussions with specialists, or simply learn the language to investigate issues further on their own. Students developing the watershed education center web site created such an FAQ page, including an invitation near the bottom of the page for users to contact one of the center’s faculty members if they had additional questions (see Figure 2).

Figure 2. Enabling Technical Literacies with Frequently Asked Questions.

- Intuitively organized primary source materials: Examples include full, unedited technical reports, complete technical data sets, and/or real-time data with supporting methodology. Students’ first impulse may be to synthesize or gloss over the gnarliest data or methodology in the name of predigesting content for their harried, imagined readers who don’t have time for “minutiae.” The problem with this well-intentioned impulse is that it denies the public an opportunity to cultivate scientific competence (Wynne, 1991). When findings are summarized in reports prepared specifically for non-specialists, citizens are left powerless to critique the methods used to arrive at those findings. Access to unmediated source materials can support citizens who wish to challenge the assumptions, systems, and institutions that sanctioned the work in question. To support productive inquiry in our project, students included links to real-time data about water temperature and dissolved oxygen on watershed education pages that would appeal to fishermen interested in fish quantity, schoolchildren learning about aquatic life, or local residents interested in climate change (see Figure 3).

Figure 3. Enabling Productive Inquiry with Access to Real-Time Data on Water Temperature and Dissolved Oxygen in Lake Acton.

Evaluate for Evidence of Useful Interactivity in the Form of Engagement, Psychological Interactivity, Emotional Connection, and Exploration

Web sites of all stripes are filled with interactivity, from basic clickable links to fillable forms. Useful interactivity in civic web sites goes beyond basic engagement to help citizens explore, investigate, and solve problems that are of interest to them. Drawing on existing theory, useful interactivity supports social participation by bringing together engagement (McGonigal, 2011), psychological interactivity that encourages knowledge building (Manovich, 2002), emotional connection (Wysocki, 2001), and exploration (Wysocki, 2001). Evaluating for useful interactivity helps designers position publics as producers of knowledge. As the work we have referenced demonstrates, publics are more likely to become producers if they are engaged, emotionally connected, and supported. These concepts work together to afford a type of civic interaction. Incorporating features that support useful interactivity requires thinking that goes beyond conventional usability and web design maxims. It requires seeing the audience in context, considering how the information relates to a range of stakeholders, and positioning those stakeholders as active participants capable of exploring their own interests.

Engagement as agency and activity. Jane McGonigal (2011) illustrated how engagement can feed agency and activity in her book Reality Is Broken: Why Games Make Us Better and How They Can Change the World. McGonigal described how editors at The Guardian in London created a game-like approach for soliciting Britons to help sort through millions of expense forms and receipts to identify fraud in expense claims from members of Parliament. McGonigal noted that this crowdsourcing enabled citizens to review 170,000 documents in 80 hours and was “a way to do collectively, faster, better, and more cheaply what might otherwise be impossible for a single organization to do alone” (p. 220). This approach has value for productive usability beyond collecting data for an organization: it positions the audience as active participants (56% of visitors participated in the project). From the users’ perspective, the web site valued their contribution and their knowledge-making; from the organization’s perspective, the web site connected with the audience, got them involved in the project, and, for many, brought them back. According to McGonigal, the web site included a section entitled “Data: What we’ve learned from your work so far” that reinforced participant efforts and gave them what McGonigal called the “I rock” vibe that gives users a sense of purpose and impact. Engagement can be game-oriented, but it need not be. Evaluating for useful interactivity means looking for features where the audience is engaged, allowed to explore, encouraged to connect information to their day-to-day lives, and invited to build new knowledge. When we assess civic web sites, we certainly need to ensure that the engagement features, whether games or other approaches, enable what users want and need and that they are sustainable. Assessing for engagement and other civic interactions, however, is a step toward the kind of transformative civic web sites that enable knowledge work.

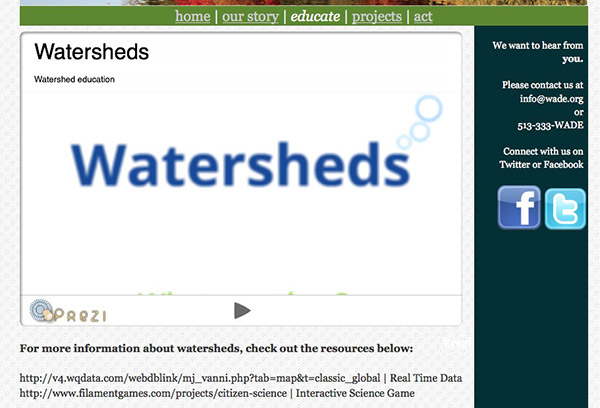

Psychological interactivity as knowledge building. Related to engagement is the notion of psychological interactivity, in which interactive features go beyond physical interactivity (e.g., clicking a link or filling in a form) to enable epistemic work. While engagement focuses on the activity that gives users a sense of purpose and impact, psychological interactivity focuses on creating an interface that enables that activity. For example, for the watershed project, one group of students developed a YouTube contest encouraging users to show activities they engaged in at Acton Lake and Hueston Woods State Park and how they were helping to improve water quality in the watershed. Each month, three videos were chosen and posted on the Watershed Education web site. This approach encouraged users to visit Acton Lake, to explain what they were doing to protect the watershed, and to visit the web site to learn how others were doing the same and to see whether their video had been chosen. Asking users to submit their stories engaged them in a watershed activity and encouraged them to return to the web site. The community partners liked the idea and want to expand it to invite users in state parks throughout Ohio. A critical aspect of the YouTube feature was the team’s own YouTube video, in which team members explained the contest and offered examples of how users might protect the watershed (see Figure 4).

Figure 4. Incorporating Useful Interactivity through YouTube.

Emotional connection as a catalyst for transformation. Emotional connection and a sense of place can be catalysts for movement and transformation. Simmons and Zoetewey (2012) found that audiences are more likely to engage with issues they believe are relevant to their lives and that including actual images audiences recognize as their neighborhoods increases this relevance. Along with the citizens in our original study, we recognize place as a potential source of agency. We are not the first scholars to make this observation (Escobar, 2001; Gieryn, 2000; Werlen, 1993). Online place, we argue, is essential for web sites that aid civic engagement because citizens crave a location to do their work collaboratively. Users cultivating technical literacy and doing the strenuous work of parsing and possibly challenging scientific consensus need a place to connect and collaborate. Online place can facilitate collaborative knowledge work.

Evaluating for place on civic web sites requires us to look for dialogic features, such as message boards, wikis, and social media streams, that provide citizens with “meeting areas.” Populating these places with actual images of the community in question also earns high marks. Images of their own towns resonate strongly with citizens (Simmons & Zoetewey, 2012), personalizing the technical issue at hand and creating a sense of emotional connection. Generic stock photography can imply that the web site is the work of outsiders who may be out of touch with the community and its concerns.

As one example of emotional connection established via place, we offer a quiz game from the watershed project about Acton Lake and the history of the watershed, with suggestions for fun things to do at the park embedded in each question (see Figure 5). If players miss several of the questions, the game suggests that they visit Hueston Woods to brush up on their knowledge of the park. The quiz itself includes full-screen pictures of Acton Lake and Hueston Woods, providing an emotional connection for those who are familiar with the park and reinforcing the importance of protecting the local watershed. Enabling audiences to see how a message relates to their day-to-day lives provides the kind of interaction that encourages more sustained participation.

Figure 5. Creating Emotional Connection to Encourage Action.

Exploration as enabling change. Enabling change in the community can start with allowing users to explore their interests and inquire on their own terms. Wysocki (2001) argued that designers create a relationship with their audiences and, through the design of the interface, show how (and whether) they value the contributions audiences can make. A “generous interface” that allows for self-exploration enables users to pursue their own interests and form their own lines of inquiry. Exploration-friendly interfaces respect that users may have goals different from those of the designer and the community partner, and they encourage users to pursue those goals.

As Barbara Mirel (2004) reminded us, complex problem-solving work necessitates “no pre-set entry points or stopping points” (p. 22). Building civic web sites that enable visitors to view technical issues from dynamic vantage points is one means of heeding Mirel’s reminder. Citizens from our previous study wanted to adopt a multi-perspective approach. For the Hueston Woods project, students designed web sites that supported this multi-perspective, wayfinding approach. Users were free to visit the web site sometimes as “fisherman” and, on subsequent visits, to adopt other identities, such as “teachers” or “students.”

In addition to adopting dynamic identities, visitors were encouraged to explore existing research along their own paths. For example, one web site development team created a Prezi explaining the importance of watersheds that encouraged playful wandering through the document's different paths rather than a linear, predetermined progression (see Figure 3 and the Prezi linked above).

These aspects of useful interactivity intersect and overlap in ways that together demonstrate consideration for the work users want to accomplish. Evaluating for every one of them in every web site project, however, would force in features that should instead evolve organically from usability research with a range of end users. In other words, a checklist insisting on every type of useful interactivity is neither necessary nor productive.

Evaluate for Evidence of Concern for the “Catalytic” Practices and Outcomes of the Project

Good civic web sites must enable activity, movement, and change in a community. This can be action that we associate with activism, or it can be change that we associate with awareness or learning. Admittedly, a concern with action or change is not exclusive to web sites designed for civic purposes. The pedagogical contexts that tend to produce projects such as these do, however, carry different expectations: many of the classes that produce civic web sites are service-learning courses or enact some form of engaged pedagogy in which the hope or expectation is that students and partners are changed by the process.

Change, therefore, is an essential value, and to attend to this value, we have turned to Patti Lather’s (1986) discussion of catalytic validity. In that article, Lather sought to “reconceptualize validity within the context of openly ideological research” and focused on the concept of validity because the concept sits at the very heart of what she called “positivist” conceptions of neutral and objective inquiry (p. 63). Lather correctly noted that openly ideological research must also be sound and defensible research. It must be rigorous as well as relevant. There are a number of types of validity, but the most important for our purposes is “construct validity,” or the ability of a researcher to make inferences between theoretical concepts and the way those concepts are operationalized in a particular study. It is also useful, we think, to reference more colloquial notions of validity as well-grounded, appropriate, and justified. Lather’s problem was how to move beyond a critique of the limited ways that validity is operationalized in (positivist) research and toward new and relevant conceptualizations of validity for engaged and activist forms of research.

For evaluation of civic web sites, there are two "catalytic" variables that need attention in our evaluation practices. First, we must have an appropriate fit between our pedagogical and rhetorical theory and the ways we enact that theory in our evaluation practice, the very problem we take up in this chapter. The second flows from the first and demands new criteria for evaluation. The concept of catalytic validity provides one such criterion. Catalytic validity "refers to the degree to which the research process re-orients, focuses, and energizes participants" through participation (p. 67). In other words, catalytic validity has implications for how students do their work as well as for the work itself. Projects that are catalytic should change things (students, participants, the situation, etc.). Ideally, participants should find themselves better able to accomplish the work they partnered with us to do. With respect to process, a catalytic web-design project would entail students engaging regularly with participants. One practice that comes to mind is the "member check," a quick feedback moment with key audiences, partners, and stakeholders (this would provide the project with what Lather calls "face validity," a notion easily paired with catalytic validity). Whatever the practice is called, it is a responsibility best pushed (and taught) to students as part of the project and made visible in the work that they make available to us for evaluation. Evaluating the catalytic value of a web site itself remains a tricky problem that has no ultimate solution within a 15-week class. It is possible, however, to attend to catalytic issues in the process itself, through reflective writing and our own quick "member checks," which provide us with indicators of this value in our evaluation practices.
It is in the documentation of process in particular, therefore, that we would expect to see indicators of change, an expectation that needs to be clearly communicated to students as part of a transparent approach to evaluation.

HEURISTIC FOR DEVELOPING ASSIGNMENTS AND EVALUATION TOOLS FOR USEFUL CIVIC WEB SITES

It will remain a challenge to determine usefulness at the point where students need a grade. Our solution is to plan for this moment in the design of assignments, to provide concepts to students that shape their approach to participants and to their project, and to expect certain practices from students. In this way, we try to make sure our assignments speak to productive usability and catalytic validity from the beginning, and we check for evidence in the processes used by students (if not a tangible product) when evaluation becomes necessary. We can’t guarantee usefulness to our partners, but we can build our assignments and assessments so that we’ve done all in our power to create circumstances that enable success for students in the near term and support usefulness down the road.

Our approach to the evaluation problem posed in this chapter is to begin addressing the problem early and to let the processes of the assignment, including any formative assessment provided, carry as much of the burden as possible. In many ways, we are arguing for well-understood assignment design practices. Even so, we intend this chapter as a heuristic for helping teachers design assignment and evaluation approaches for civic web site projects. What may be new and useful here are the values and concepts we offer for designing assignments and for evaluating the work that students produce in response to those assignments. In our own practice, however, we also focus more concretely on what we have students do, make, and write as part of such projects, and on what we look for (indicators) in that work as visible traces of the concepts and values we teach and expect student projects to express (see Table 3).

Table 3: Evidence and Indicators of Concepts and Values in Projects

| Concept/Value | Evidence | Indicators |
|---|---|---|
| Alternative Use | Report, Research Design (plans), Research Memos (results), Project Logs, Final Product(s) | |
| Technical Literacy for Inquiry | Report, Final Product(s), Usability Evaluations | For example, the web sites that included a Frequently Asked Questions section or a self-directed Prezi introducing users to watershed terms and language and to interpreting the significance of changing water temperatures. |
| Useful Interactivity | Report, Final Product(s), Interactive Features, Usability Evaluations, Reflective Writing | For example, the web site that invites users to post videos of themselves protecting the Acton Lake watershed. |

As Table 3 shows, we have students produce a number of artifacts that we expect to supply indicators of what we value in useful civic web sites. Some of these artifacts are also limited in what they can reveal. What we wish the table to express, however, is that some of these artifacts are expected to document aspects of process that are otherwise truly difficult to capture. Therefore, as teachers, we must be clear with students about the values of the process (usefulness and change), about how these artifacts are to capture process, and about how we will therefore read them. Of critical importance in this regard is the need for civic web site projects to be research-intensive, for those inquiries to be well planned and documented, and for that work to play an important role in design decisions and in the products ultimately delivered to users. All told, the key with civic web sites is to move beyond asking "how easily can the web site be used?" (the question at the center of conventional usability) to ask, instead, "how useful is this web site?" This question animates productive usability and certainly increases the challenges of evaluating civic web sites. We hope this chapter will help teachers meet that challenge.


APPENDIX

Online Design Project Assignment Sheet

Although principles of good design can be applied to all document design projects, designing effective online projects requires additional strategies. Accessing, assembling, and making use of information online is more complex than it is in print, and online design must accommodate these differences. The goal of this project is to provide you with experience critiquing, planning, and designing professional-level online material. Specifically, you will develop a web site for Hueston Woods State Park that targets K–12 students, their teachers, and potential donors. Although we will be working as a class (and in small teams) on many aspects of web site development, you will each be responsible for completing a web critique, developing a design mockup, creating a site diagram, and developing sections of your group's web site on your own in Adobe Dreamweaver; because this is a group project, everyone must use the same, most recent version of Dreamweaver.

Components of the Project

Before you begin coding your web site, you need to complete at least three planning activities to help you determine appropriate design options for this particular context. These planning activities include a web critique, a design mockup/wireframe, and a site diagram.

Web critique

You must first determine what makes an effective web site for the type of organization for which you are designing the site. Examining a range of similar sites will help you begin to understand the categories, features, tone, and design appropriate for a specific context. By investigating existing web sites, you will develop a list of criteria you believe should be included in your web site. This examination and the resulting criteria are important as you design a web site because often neither the client nor the designer is able to think through the different possibilities that exist for displaying the same type of information. This critique should provide you with such a range of possibilities as well as an understanding of which ones work well in that context. The web critique will be based on a class discussion and handout.

Design Mockups/Wireframes

Based on your web critique, readings, design principles, and class discussions, you will individually develop on paper a potential design for the web site. The mockup should illustrate site architecture and page layout (page grid), including specific colors, link names, banner, and navigation location.

Site Diagram

Sketching an organizational diagram of the web site can help you think about how to divide the information into appropriate pages with appropriate relationships to other pages. The site diagram also encourages you to consider the terminology you use for each page. An example of a site diagram is included in the “developing effective civic web sites” slideshow presentation linked to the class schedule.

Web Site Development

In small teams, you will examine your web critiques, mockups, and site diagrams to plan and design a web site for the Acton Lake Watershed Education Center. As a class, we will discuss strategies for effective teamwork.

Usability Test/Usability Test Plans

As a team, you will develop a set of usability test plans specifically for your design. (We will ask the participants the questions verbally in face-to-face usability testing.)

Recommendation Report

As with the print project, you will write a short recommendation report to the organization explaining your design decisions. You will write the report collaboratively with your team. The purpose of the report is to persuade your client that your design best meets their purpose and users’ needs.


REFERENCES

Gieryn, Thomas F. (2000). A space for place in sociology. Annual Review of Sociology, 26, 463–496.

Grabill, Jeffrey T. (2007). Writing community change: Designing technologies for citizen action. New York: Hampton Press.

Kinsella, William J. (2004). Public expertise: A foundation for citizen participation in energy and environmental decisions. In Stephen P. Depoe, John W. Delicath, & April Marie-France Aepli Elsenbeer (Eds.), Communication and public participation in environmental decision making (pp. 83–95). Albany: State University of New York Press.

Kolowich, Steve. (2010, August 4). No laughing matter. Inside Higher Ed. Retrieved from http://www.insidehighered.com/news/2010/08/04/websites

Lather, Patti. (1986). Issues of validity in openly ideological research: Between a rock and a soft place. Interchange, 17 (4), 63–84.

Manovich, Lev. (2002). The language of new media. Cambridge, MA: MIT Press.

McGonigal, Jane. (2011). Reality is broken: Why games make us better and how they can change the world. New York: Penguin Books.

Mirel, Barbara. (2004). Interaction design for complex problem solving. San Francisco: Morgan Kaufmann.

Reich, Robert B. (1992). The work of nations: Preparing ourselves for 21st century capitalism. New York: Vintage.

Simmons, Michele, & Grabill, Jeffrey T. (2007). Toward a civic rhetoric for technologically and scientifically complex places: Invention, performance, and participation. College Composition and Communication, 58 (3), 419–448.

Simmons, Michele, & Zoetewey, Meredith W. (2012). Productive usability: Fostering civic engagement in online spaces. Technical Communication Quarterly, 21 (3), 251–276.

Werlen, Benno. (1993). Society, action and space: An alternative human geography. London: Routledge.

White, Edward M. (1999). Assigning, responding, evaluating: A writing teacher’s guide (3rd ed.). Boston: Bedford/St. Martin’s.

Wynne, Brian. (1991). Knowledges in context. Science, Technology, and Human Values, 16 (1), 111–121.

Wysocki, Anne Frances. (2001). Impossibly distinct: On form/content and word/image in two pieces of computer-based interactive multimedia. Computers and Composition, 18, 137–162.

ACKNOWLEDGEMENT

A special thanks to the Fall 2011 Visual Rhetoric class at Miami University. The web sites included in this article were designed by Taylor Gibbs, Rachel Lanka, and Nicole Thieman; Allie Griebel and Cassie Callan; Jennifer Lenoard, Allison McGilivray, and Brittany Molnar; Andy Buchner, Paul DeNu, Emily Ryan and Reid Wegner.
